In the last post [indications…], I started out by objecting to the FDA Advisory Panel's endorsement of an indication for Vortioxetine [Brintellix®] in Cognitive Dysfunction in Major Depressive Disorder on the grounds of obvious Indication Creep [the well-known marketing strategy of adding indications to allow misleading advertising]. But as I looked into the articles themselves, my objection broadened. So beyond objecting to Indication Creep, I think their analysis is scientifically flawed. Those articles are available online and fully referenced in indications….

- AM

- PM

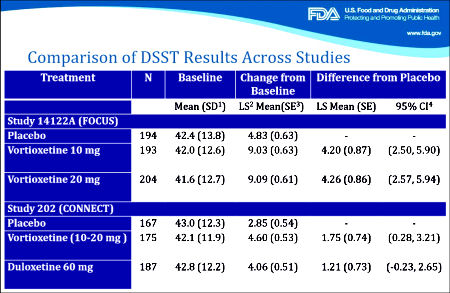

The second FDA presentation needs a look. It documents the extreme persistence of Takeda/Lundbeck in pursuing this indication [working with the FDA]. And towards the end, it has a summary of the specifics of the Takeda/Lundbeck papers. In my opinion, they're off the mark, but it's a good description of the mark I think they missed. Their argument hinges on the results of the DSST [Digit Symbol Substitution Test] from the two studies shown in this summary slide from the FDA presentation:


In the first study [FOCUS, 2014], there were three groups: Placebo and two different doses of Vortioxetine. The One-Way Analysis of Variance [Omnibus ANOVA] is significant at p<0.001. The pairwise comparisons are significant for both doses at p<0.001, with Cohen's d=0.487 and 0.479 respectively, both in the moderate range. The pairwise comparison of the two doses is not significant (p=0.945). However, in the second study [CONNECT, 2015], things were not so rosy. The Omnibus ANOVA had p=0.062, which is not significant. The pairwise comparisons are p=0.021 for Vortioxetine versus Placebo, p=0.104 for Duloxetine versus Placebo, and p=0.463 for Vortioxetine versus Duloxetine. The Cohen's d Strength of Effect was 0.250 for Vortioxetine versus Placebo [weak] and 0.173 for Duloxetine versus Placebo [nil]. In both analyses, they skipped the Omnibus ANOVA, which is a prerequisite before even running the pairwise comparisons. So the second study did not reach statistical significance when fully analyzed, AND the Strength of Effect was near-trivial. In addition, using another method appropriate for datasets with more than two groups, Tukey's HSD [Honestly Significant Difference] test, no comparison was significant: PBO vs VTX p=0.055, PBO vs DLX p=0.235, and VTX vs DLX p=0.743. The graphic on the right compares the DSST Mean Differences with 95% Confidence Intervals from the Tukey HSD test in these trials.
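For anyone who wants to check this kind of arithmetic from published summary statistics, here is a minimal Python sketch of the by-the-book order: an omnibus one-way ANOVA computed from each group's mean, SD, and n, followed by Tukey-Kramer pairwise intervals. It is an illustration under stated assumptions, not the FDA's or the sponsors' code. The function names are hypothetical; the exact p-value shortcut only works for three groups (2 numerator degrees of freedom), which happens to be the design of both trials; the studentized-range critical value `q_crit` is an assumed input that has to be looked up in a table; and the numbers at the bottom are made up, not the FOCUS or CONNECT data.

```python
import math
from itertools import combinations


def one_way_anova(means, sds, ns):
    """Omnibus one-way ANOVA from per-group means, SDs and sample sizes.

    Returns (F, df_between, df_within, ms_within).
    """
    k = len(means)
    n_total = sum(ns)
    grand_mean = sum(n * m for n, m in zip(ns, means)) / n_total
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    # Within-group sum of squares recovered from each group's SD.
    ss_within = sum((n - 1) * s ** 2 for n, s in zip(ns, sds))
    df_between, df_within = k - 1, n_total - k
    ms_within = ss_within / df_within
    f_stat = (ss_between / df_between) / ms_within
    return f_stat, df_between, df_within, ms_within


def anova_p_three_groups(f_stat, df_within):
    """Exact upper-tail p-value for F(2, df_within).

    Valid ONLY for three groups (df_between == 2), where the F survival
    function reduces to (1 + 2F / df_within) ** (-df_within / 2).
    """
    return (1.0 + 2.0 * f_stat / df_within) ** (-df_within / 2.0)


def tukey_kramer_intervals(means, ns, ms_within, q_crit):
    """Pairwise mean differences with Tukey-Kramer simultaneous intervals.

    q_crit is the studentized-range critical value for k groups and
    df_within degrees of freedom; it is an assumed input from a table.
    Returns {(i, j): (mean_j - mean_i, lower, upper)}.
    """
    intervals = {}
    for i, j in combinations(range(len(means)), 2):
        diff = means[j] - means[i]
        se = math.sqrt(ms_within / 2.0 * (1.0 / ns[i] + 1.0 / ns[j]))
        half_width = q_crit * se
        intervals[(i, j)] = (diff, diff - half_width, diff + half_width)
    return intervals


# Hypothetical three-arm summary data (NOT the FOCUS or CONNECT results).
means = [1.0, 2.0, 3.0]   # e.g. placebo, arm A, arm B
sds = [1.0, 1.0, 1.0]
ns = [10, 10, 10]

# Step 1, by the book: the omnibus test comes first.
f_stat, df_b, df_w, msw = one_way_anova(means, sds, ns)
p_omnibus = anova_p_three_groups(f_stat, df_w)
omnibus_significant = p_omnibus < 0.05

# Step 2: pairwise Tukey-Kramer intervals.
# 3.51 approximates q(0.05; k=3, df=27); check a studentized-range table.
intervals = tukey_kramer_intervals(means, ns, msw, q_crit=3.51)
for (i, j), (diff, lo, hi) in intervals.items():
    print(f"group {j} - group {i}: {diff:.2f} [{lo:.2f}, {hi:.2f}]")
```

With these made-up numbers the omnibus F is 10 on 2 and 27 degrees of freedom (p well under 0.001), yet the Tukey-Kramer interval for group 1 versus group 0 still includes zero, even though an unadjusted t-test on those same two groups (t ≈ 2.24, 27 df) would clear p<0.05. That is the same pattern as the CONNECT Vortioxetine versus Placebo comparison (p=0.021 unadjusted, p=0.055 by Tukey's HSD).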


Things like skipping the omnibus ANOVA, outcome switching, or failing to correct for multiple comparisons are all too common in the clinical trials of pharmaceuticals [all three were present in our examination of Paxil Study 329]. While you can even find articles that support such practices, that's not what's in the statistics books, and you sure don't want to do that on your statistics final exam if you want to pass. In fact, my insistence on playing by the book opens me up to criticism of bias. My take on these debates is that they tell us how close to the wire many of these trials have been, in spite of the fact that statistical significance is the weakest of our tools to evaluate drugs. Effect sizes, whether measured by the Standardized Mean Difference [Cohen's d, Hedges g] or simply the Difference in the Means as in the figure on the right, are a better choice for approximating clinical significance. Actually, simple visual inspection itself isn't half bad. Both the smallness of the MEAN DIFFERENCE and the difference between the studies are readily apparent [see 4. and 5. in this comment]. The graph also shows something else. One thing we've learned from clinical trials over and over is that replication is perhaps our most powerful tool for evaluating efficacy. And from the graph, it's clear that the CONNECT study did not replicate the DSST findings from the FOCUS trial.
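The standardized mean differences mentioned above are easy to compute from published summary statistics. Here is a minimal sketch, assuming two independent groups with known means, SDs, and sample sizes; Hedges g uses the common small-sample approximation J = 1 - 3 / (4(n1 + n2) - 9). The function names and input numbers are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Standardized mean difference: (mean1 - mean2) / pooled SD."""
    return (mean1 - mean2) / pooled_sd(sd1, n1, sd2, n2)


def hedges_g(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d times the approximate small-sample correction J.

    J = 1 - 3 / (4 * (n1 + n2) - 9), the usual closed-form approximation.
    """
    d = cohens_d(mean1, sd1, n1, mean2, sd2, n2)
    correction = 1.0 - 3.0 / (4.0 * (n1 + n2) - 9.0)
    return d * correction


# Hypothetical drug-vs-placebo summary data (not taken from any trial).
d = cohens_d(1.0, 2.0, 50, 0.0, 2.0, 50)
g = hedges_g(1.0, 2.0, 50, 0.0, 2.0, 50)
print(f"Cohen's d = {d:.3f}, Hedges g = {g:.3f}")
```

With 50 per arm the correction barely matters (g ≈ 0.496 versus d = 0.5); it only becomes noticeable in small trials. The raw Difference in the Means needs no such machinery at all, which is part of its appeal.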

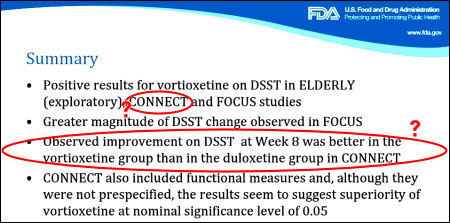

The weaknesses in this application are particularly important because the Takeda/Lundbeck sponsors are not only asking for approval of their product; they're asking the FDA to create an entire new indication for just that purpose: Cognitive Dysfunction in Depression. So why did 8 out of 10 members of the Advisory Group vote for approval? I wasn't there, so I don't know, but I can speculate that they bought the glitz. The sponsors have mounted a five-year-long full-court press, as described in the afternoon FDA presentation above [FDA Presentations]. The National Academy of Science Workshop at the Institute of Medicine was loaded to the gills with PHARMA-friendly figures promoting Translational Medicine, with the author of the CONNECT study as the sole presenter on the Effects of Pharmacological Treatments on Cognition in Depression. And be sure to take a look at the slides from the loaded FDA hearing presentations of a KOL-supreme [Madhukar Trivedi, M.D. Presentation] and the sponsors [Takeda Presentations]. Finally, in spite of the generally skeptical review presented by the FDA [FDA Presentations], the final slide was surprisingly conciliatory [my objections are marked in red, particularly the third one, which was not statistically significant even by their own testing (p=0.463)]:

