Clinical Trials of various sorts are intended to be the final word in evaluating both the safety and the efficacy of medications. In psychiatry, they’re more complicated because the outcome parameters come from the subjective reports of either observers or subjects, converted to objective data by rating forms and checklists. Unfortunately, they’ve moved to center stage as a major focus of all sorts of research misconduct. The problems of conflict of interest are rampant, and a major Achilles Heel is in the analysis of the raw information. There are so many clear examples of the manipulation of the analytic tools of science in the service of distortion that the entirety of the psychiatric clinical trial literature has come under suspicion.

There are nodes all along the chain of steps in clinical trials where bias might affect the outcome, but most of the attention focuses on what happens after the data has been collected. The stakes are high, and it’s in that netherworld between the time the raw results are reported and the time the paper is submitted that distortions break out in epidemic proportions. Critics are limited to the written word, and can only use indirect means to find evidence that the raw results have been inappropriately manipulated.

One recent example embodies the controversy about data transparency. Dr. Robert Gibbons et al published two papers in the Archives of General Psychiatry last year addressing the efficacy and safety of the SSRIs in depression. They did a meta-analysis of Eli Lilly’s trials of Prozac in children and adults and Effexor in adults only [if you’re not familiar with these papers, put "Gibbons" in the search engine of this blog for more than you’d ever want to know]. A number of people cried foul, and submitted letters to the publishing journal. After a saga of back and forth negotiations with the editor, two of the letters were finally published recently, along with Gibbons et al’s responses. My letter was published only on this blog [an anatomy of a deceit 4…].

Several things: In the paper and in numerous media outings afterwards, Dr. Gibbons repeatedly made the point that his meta-analysis would not have been possible if Eli Lilly hadn’t graciously allowed him access to the raw data for all of their Prozac Clinical Trials – a unique opportunity. His meta-analysis involved complex statistical methods with innumerable references to parameters of data inclusion or exclusion which were impossible to follow, particularly with the paucity of data included in his papers.

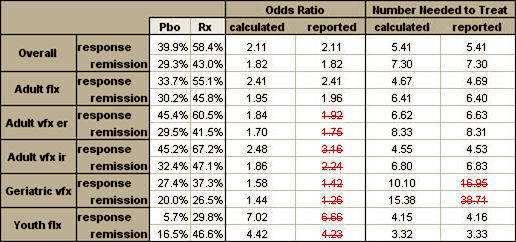

Moreover, there is computational error. Only 4 of 12 stated odds ratios for treatment benefit can be confirmed from the response and remission data [2]. Two of 12 number-needed-to-treat computations are seriously inaccurate (16.95 Gibbons et al vs 10.1 actual; 38.71 Gibbons et al vs 15.38 actual). The inarguable error of these straightforward computations puts in doubt the soundness of the authors’ very complex multivariate statistical computations.

Finally, with respect to the “computational error” in our second article on the benefits of antidepressant treatment, we clearly state that for the analysis of response and remission, the results of the analysis were based on “a mixed effects logistic regression model adjusting for study.” It is not possible for anyone [including Dr Carroll] to replicate these computations, which require the individual study–level data. Dr Carroll’s discrepancy for the 2 number-needed-to-treat estimates was because we based them on the log-time [sensitivity analysis] rather than the linear-time model. The estimates of number needed to treat of 10.10 [response] and 15.31 [remission] are the correct estimates for the linear-time model and also show the reduced efficacy in elderly individuals relative to youths and adults.
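For readers who want to see the arithmetic in dispute, here is a minimal sketch of how a crude odds ratio and number needed to treat are usually computed from two response rates. The rates below are hypothetical and are not taken from the Gibbons et al papers; the function names are mine. This is the simple unadjusted calculation, not the mixed effects logistic regression model described in the response, which cannot be reproduced without the study-level data.

```python
def odds_ratio(p_treated: float, p_control: float) -> float:
    """Crude (unadjusted) odds ratio from two response proportions."""
    odds_treated = p_treated / (1 - p_treated)
    odds_control = p_control / (1 - p_control)
    return odds_treated / odds_control


def number_needed_to_treat(p_treated: float, p_control: float) -> float:
    """NNT is the reciprocal of the absolute difference in response rates."""
    return 1 / (p_treated - p_control)


# Hypothetical response rates, chosen only for illustration.
p_drug, p_placebo = 0.50, 0.40

print(round(odds_ratio(p_drug, p_placebo), 2))              # 1.5
print(round(number_needed_to_treat(p_drug, p_placebo), 1))  # 10.0
```

Read in reverse, a number needed to treat of 10.1 corresponds to a difference in response rates of about 9.9 percentage points, while 16.95 corresponds to about 5.9 percentage points.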

With Dr. Gibbons’ widely publicized articles reanalyzing already published trials, the only justification for doing the meta-analysis in the first place was that he had access to individual study–level data. The way he explained getting different results from others, or even from those analyzing these same studies the first time around, was his access to individual study–level data. And now he’s claiming that the only possible way to check his work is to have access to individual study–level data. I agree with him, and that’s the exact reason that the push for data transparency in Clinical Trials is essential and gaining steam. In fact, in this pair of articles, Robert Gibbons is a powerful ombudsman for the argument of Ben Goldacre, Fiona Godlee, Healthy Skepticism, Glenis Willmott, AllTrials, and almost anyone else who isn’t entangled with the Academic·Pharmaceutical Complex.

You make an excellent point – coöpting Gibbons in the cause of the AllTrials petition.

As for the rejoinder by Gibbons to our charge of computational error, who does he think he is fooling (besides the editor of JAMA Psychiatry)? His voodoo mathematical explanation might look possible were it not for the fact that most of our computations in your Table above did align with his! Why did the voodoo fail in all those cases of agreement? It doesn’t pass the straight face test.

I am gobsmacked that his response letter was published, and that John Davis and John Mann signed on to it.

I ran across this article today:

Scientists of all sorts increasingly recognize the existence of systemic problems in science, and that as a consequence of these problems we cannot trust the results we read in journal articles. One of the biggest problems is the file-drawer problem. Indeed, it is mostly as a consequence of the file-drawer problem that in many areas most published findings are false.

Consider cancer preclinical bench research, just as an example. The head of Amgen cancer research tried to replicate 53 landmark papers. He could not replicate 47 of the 53 findings.

http://alexholcombe.wordpress.com/2012/08/29/protect-yourself-during-the-replicability-crisis-of-science/

The fact that the push is being felt throughout the scientific research community may help to put pressure on psychiatric researchers and let them save a little face at the same time.

“Gobsmacked.” Now that is truly a Sunday NY Times crossword puzzle word. I shall file that away for future reference. Thanks Dr. C.

And here we have admiring reference to Gibbons, 2012 http://blogs.plos.org/mindthebrain/2012/12/26/the-antidepressant-wars-a-sequel-how-the-media-distort-findings-and-do-harm-to-patients/ as an avatar of good information for clinical practice.