Posted on Monday 11 April 2016

In a psychotherapy career, you learn some odd things – things you didn’t even know were there to learn. One important one is that we all do wrong and hurtful things that cause trouble in both our own lives and the lives of people around us. And if you look hard enough in the mirror, you see your own blemishes, and it’s important to address them too. As a therapist, I’ve found that the most difficult task is often helping people look at their own shortcomings constructively and come to grips with the negative things they’ve done themselves. It’s not a question of getting "off the hook," it’s about cleaning up your own act going forward. In the Twelve Step programs, it occupies a number of the steps. But it’s a piece of any recovery enterprise – and you don’t get there by sweeping things under the rug.

My complaint about the Institute of Medicine Reports and the International Committee of Medical Journal Editors article is that they never say why Data Transparency is such a hot-potato issue. Why are we even talking about this? Yet we all know the answer. The pharmaceutical industry, the third party payers, and a way-too-big segment of the medical profession have behaved very badly, and the clinical trials of medications sit in the middle of that bad behavior. There has been corruption small and large, and a solid piece of reform is vitally needed. That’s why this is on the front burner – the sins of our fathers [and our fathers’ children AKA ourselves].

I think of Jeffrey Drazen, editor of the world’s first-line medical periodical, the New England Journal of Medicine, as a wolf in sheep’s clothing. In spite of having his name on the three documents laying out a plan for Data Transparency, he uses his influential editorial page as a bully pulpit for only one side of the conflict. The month after the first IOM report in 2014 he wrote:

by Jeffrey M. Drazen, M.D.
New England Journal of Medicine. 2014; 370:662.

… I am especially eager to receive feedback from the biomedical community about one issue in particular of the many considered in the report. At the completion of a research study or clinical trial, a first report is often published. Usually, this report contains the key findings of the study but only a small fraction of the data that were gathered to answer the scientific or clinical question at hand. To what extent and for how long should the investigators who performed the research have exclusive access to the data that directly support the published material? And should the full study data set be subject to the same timetable? Open-data advocates argue that all the study data should be available to anyone at the time the first report is published or even earlier. Others argue that to maintain an incentive for researchers to pursue clinical investigations and to give those who gathered the data a chance to prepare and publish further reports, there should be a period of some specified length during which the data gatherers would have exclusive access to the information…

by Jeffrey M. Drazen, M.D.
New England Journal of Medicine. 2015; 372:201-202.

… With whom will data be shared? When they register a trial, investigators will need to indicate whether their data can be shared with any interested party without a formal agreement regarding the use of the data, only with interested parties willing to enter into a data-sharing agreement, or only with interested parties who bring a specific analysis proposal to a third party for approval. It is possible that investigators could choose to share their data with different groups at various times. For example, data might be shared for the first year of availability only with parties who specify their analysis plan but be shared more widely thereafter…

by Jeffrey M. Drazen, M.D.
New England Journal of Medicine. 2015; 372:1853-1854. May 7, 2015

Over the past two decades, largely because of a few widely publicized episodes of unacceptable behavior by the pharmaceutical and biotechnology industry, many medical journal editors [including me] have made it harder and harder for people who have received industry payments or items of financial value to write editorials or review articles. The concern has been that such people have been bought by the drug companies. Having received industry money, the argument goes, even an acknowledged world expert can no longer provide untainted advice. But is this divide between academic researchers and industry in our best interest? I think not — and I am not alone. The National Center for Advancing Translational Sciences of the National Institutes of Health, the President’s Council of Advisors on Science and Technology, the World Economic Forum, the Gates Foundation, the Wellcome Trust, and the Food and Drug Administration are but a few of the institutions encouraging greater interaction between academics and industry, to provide tangible value for patients. Simply put, in no area of medicine are our diagnostics and therapeutics so good that we can call a halt to improvement, and true improvement can come only through collaboration. How can the divide be bridged? And why do medical journal editors remain concerned about authors with pharma and biotech associations? The reasons are complex. This week we begin a series of three articles by Lisa Rosenbaum examining the current state of affairs…

by Dan L. Longo, M.D., and Jeffrey M. Drazen, M.D.
New England Journal of Medicine. 2016; 374:276-277.

… However, many of us who have actually conducted clinical research, managed clinical studies and data collection and analysis, and curated data sets have concerns about the details. The first concern is that someone not involved in the generation and collection of the data may not understand the choices made in defining the parameters. Special problems arise if data are to be combined from independent studies and considered comparable. How heterogeneous were the study populations? Were the eligibility criteria the same? Can it be assumed that the differences in study populations, data collection and analysis, and treatments, both protocol-specified and unspecified, can be ignored? A second concern held by some is that a new class of research person will emerge — people who had nothing to do with the design and execution of the study but use another group’s data for their own ends, possibly stealing from the research productivity planned by the data gatherers, or even use the data to try to disprove what the original investigators had posited. There is concern among some front-line researchers that the system will be taken over by what some researchers have characterized as “research parasites”…

So Drazen, a prominent and influential member of the Committees setting the policy for Data Transparency going forward, hardly represents a neutral position. And it’s not like the industry side isn’t represented. For example, the IOM Activity Sponsors:

• Bayer
• Sanofi
• Takeda

It would be foolish not to be concerned that by the time Data Transparency becomes a reality, it will be severely eroded and become as much a failed reform as previous attempts. For example, the ClinicalTrials.gov Results Database has been around for years, but industry and academia just ignored it, even when it was required. We need a solid policy with teeth in it, and Jeffrey Drazen is not the guy for that kind of work. I started with comments about the need to look in the mirror as part of any recovery. He’s advocating just the opposite, ignoring the very problems that we’re trying to solve.

Grants, Advisory Committees, or Speakers Bureaus. And that’s what Drazen is talking about, "academician$ who are receiving per$onal money from the pharmaceutical companie$". He’s implying that they can render editorial or review article opinions unbiased by the fact that they are being paid in some form or another by the manufacturer of the drugs being reviewed. It’s not the dialog between academia and industry that’s in question, it’s the money. And his opening sentence …

But following a series of well-publicized feuds with prominent medical researchers and former editors of the Journal, some are questioning whether the publication is slipping in relevancy and reputation. The Journal and its top editor, critics say, have resisted correcting errors and lag behind others in an industry-wide push for more openness in medical research. And dissent has been dismissed with a paternalistic arrogance, they say.

they were the Pueblo and other Sacred Clowns. In classic Greek Tragedy, it was often the chorus. Such figures can be sarcastic, even openly hostile, iconoclastic, and can generally deliver the cold hard truth [in a light-hearted way]. I think of John Ioannidis as occupying such a place in Medicine.

we were told Conflict of Interest was a pejorative term and should be changed to Confluence of Interest [in a bid to suggest something like synergy]. While our collective eyes rolled at the time [see

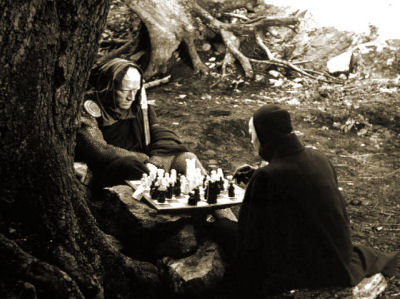


I doubt most of us would say it that way ["unlucky"]. There was a surprising-to-me absence of attention to the actual medical benefit or risk – mainly comments on the success or failure of various gambits. I’m not being hyper-moral here; business sites are about business, after all. It was just a bit jarring – as was something else. Much of this literature is about strategies. It reads like a huge chess game with the various moves and gambits cataloged, and sometimes relished. In the case of Lu AF35700, Lundbeck’s new CEO, Kåre Schultz, is shutting down some of Lundbeck’s R&D, laying off employees, and dropping other candidate drugs to raise the cash for his big bet on Lu AF35700 [AKA rejig]. A 1,000-subject, three-year international clinical trial is apparently going to cost them a mint, just as their failed bet on Brintellix® as a think-better antidepressant cost them dearly. It’s little surprise they hauled in the uber-KOLs for that FDA hearing.