Posted on Sunday 4 December 2016

NETWORK META-ANALYSIS: John Ioannidis

Let’s face it – for as much as Congress and the evening news pundits push us to look forward to bold new medical breakthroughs, for a lot of us, this is a time for looking back over where we’ve been and cleaning up a lot of misinformation and garbled, deceitful science. And while Stanford’s John Ioannidis recurrently warns us about sloppy/deceitful research [Why Most Published Research Findings Are False] and overdoing the Meta-Analyses and Systematic Reviews [The Mass Production of Redundant, Misleading, and Conflicted Systematic Reviews and Meta-analyses], he’s also an important resource for anyone interested in looking through the Clinical Trial Retrospectoscope. A given meta-analysis might address all the comparable studies of a single drug, or further, all the drugs aiming at the same target [using the same metrics]. A Network Meta-Analysis goes further. By using the Comparator Drugs in the various RCTs, it aims to create a hierarchy of relative efficacy. Obviously, the further one gets from the primary data, the more complex and abstract the mathematical operations become, and the more room there is for error, gibberish, or both. Ioannidis is right there at hand to at least outline the pitfalls and methodology of such an undertaking in an article understandable by mortals [Demystifying trial networks and network meta-analysis].

If there is a maestro of meta-analyses for the psychiatric drugs, it’s Oxford’s Andrea Cipriani, longtime contributor to the Cochrane Collaboration Systematic Reviews. This summer, his group published a Network Meta-Analysis of the SSRI/SNRI drugs in child and adolescent depression [Comparative efficacy and tolerability of antidepressants for major depressive disorder in children and adolescents: a network meta-analysis.][see also my antidepressants in kids? a new meta-analysis…] that concluded: "When considering the risk-benefit profile of antidepressants in the acute treatment of major depressive disorder, these drugs do not seem to offer a clear advantage for children and adolescents. Fluoxetine is probably the best option to consider when a pharmacological treatment is indicated."

If you read this blog from its psychiatry origins [2009] to the present, the overriding focus has been on RCTs [Randomized Clinical Trials] of Psychiatric Drugs in the post-Prozac era. And of those, Paroxetine [Paxil] Study 329 would stand out as something of a near obsession [Efficacy of Paroxetine in the Treatment of Adolescent Major Depression: A Randomized, Controlled Trial]. I make no apology for that. As a clinician, I am a psychiatrist and psychoanalyst, and practiced psychotherapy throughout my career. So case study and n=1 are very much my cup of tea. But there are other reasons this study is so prominently mentioned. It is virtually the only study where we have the raw data, and the internal documents that allow it to be investigated thoroughly. When we did our RIAT reanalysis [Restoring Study 329: efficacy and harms of paroxetine and imipramine in treatment of major depression in adolescence], I was focused on the efficacy analysis. That story has been often told here so I’ll move on to my point. They reported positive results in a negative trial by changing the Outcome Variables from those declared in the a priori Protocol. And spending several years immersed in that reanalysis taught me how essential pre-registration is in conducting a truly scientific study.
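To put a rough number on why changing the Outcome Variables matters, here is a back-of-the-envelope sketch in Python. It assumes a drug with no true effect, the conventional 0.05 significance threshold, and outcomes that behave as independent tests. That last assumption is a simplification [the outcome measures within a single trial are correlated], and the function name is mine, used only for illustration.

```python
def chance_of_false_positive(n_outcomes, alpha=0.05):
    """Chance that at least one of n_outcomes independent tests of a
    drug with no true effect comes out 'significant' at level alpha."""
    # Each test stays non-significant with probability (1 - alpha);
    # all of them staying non-significant is that value to the n-th power.
    return 1 - (1 - alpha) ** n_outcomes


if __name__ == "__main__":
    # Hypothetical counts of outcome measures examined after the fact.
    for n in (1, 2, 4, 8):
        print(f"{n} outcomes: {chance_of_false_positive(n):.1%}")
```

Under those assumptions, looking at four extra outcomes gives a bit under a 19% chance of at least one spuriously "significant" result, against the nominal 5% for a single outcome declared beforehand. That is the arithmetic behind insisting that the outcomes be fixed in the Protocol.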

by Sarah Barber and Andrea Cipriani

Australian & New Zealand Journal of Psychiatry. 2016 Nov 17. [Epub ahead of print]

…According to the results from this study, psychiatrists who prescribe paroxetine to a 15-year-old with major depression are practising evidence-based medicine. Or at least they were, until a major re-analysis in 2015 found no difference between paroxetine and placebo when only outcome measures pre-specified in the original study protocol were considered [Restoring Study 329: efficacy and harms of paroxetine and imipramine in treatment of major depression in adolescence]. The statistically significant findings in Study 329 original article, it emerged, relied on four new outcome measures, introduced post hoc by the sponsor. It is known that pharmaceutical industry-funded studies are more likely to show favourable efficacy results for investigational drugs than independent trials. Study 329 demonstrates how outcome-switching and selective reporting of results can be used to manipulate results…

This is an important issue, which is at the heart of the debate in the scientific literature about evidence based medicine and, therefore, evidence-based practice. Ben Goldacre and colleagues at the Centre for Evidence-Based Medicine at the University of Oxford have been systematically investigating the issue of outcome-switching in trials published in top medical journals and sharing their results on the website COMParetrials.org. They have found that poor trial reporting often masks inadequate design, and studies such as 329 are just examples of a widespread practice in all areas of medical research, not only psychiatry. This is despite the endorsement by prominent medical journals of the Consolidated Standards of Reporting Trials [CONSORT] Statement, a guideline for authors to prepare reports of trial findings, facilitating their complete and transparent reporting and aiding their critical appraisal and interpretation. Of course, good quality trials may be poorly reported. However, transparent reporting on inadequate trials will reveal deficiencies in the design if they exist, as in the case in Study 329. Among other things, CONSORT requires there to be "completely defined pre-specified primary and secondary outcome measures" and clear reporting of "any changes to trial outcomes after the trial commenced, with reasons". Both are absent in Study 329…

While we fully support the efforts of these groups, we wish to address the needs of practicing clinicians, who want to know which is the most reliable estimate of paroxetine in young people with major depressive disorder. Individual trials and also pairwise meta-analyses are not enough. Network meta-analysis has the great advantage over standard meta-analysis of comparing all treatments against each other [even if there is no trial data comparing the interventions directly] and ranking them according to their relative efficacy or tolerability with a precise degree of confidence. Another important advantage of network meta-analysis is that the effects of sponsorship bias are distributed [and therefore diluted] across the network. The case of Study 329 is a clear demonstration of this added value. A recent network meta-analysis has compared all antidepressants for major depression in children and adolescents [Comparative efficacy and tolerability of antidepressants for major depressive disorder in children and adolescents: a network meta-analysis]. The search strategy, though, was completed in May 2015, a few months before the publication of the re-analysis of study 329. As a result, Study 329 was included with the original 2001 data biased towards paroxetine; however, the overall results from the network meta-analysis showed that paroxetine was not statistically significantly different from placebo. This is because by combining direct with indirect evidence, the bias in studies that favour the investigational drug over placebo is mitigated by the findings of other indirect comparisons in the network. Interestingly, the final results of the network meta-analysis showed that, among all antidepressants, only fluoxetine was significantly more effective than placebo. This is probably a robust result, because it is consistent with the direct and indirect evidence comparing fluoxetine with all the other compounds in the network.
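The idea of combining direct with indirect evidence can be sketched with some simple arithmetic. What follows is a minimal illustration in Python, not the method used in the paper: it uses a plain adjusted indirect comparison through a common comparator and fixed-effect inverse-variance pooling, whereas a real network meta-analysis fits all the comparisons at once with more elaborate models. Every number and name below is invented for the example.

```python
import math


def indirect_estimate(d_ac, se_ac, d_bc, se_bc):
    """Effect of A vs B inferred through a common comparator C.

    The effects subtract and the variances add, so the indirect
    estimate is always less precise than either of its inputs.
    """
    return d_ac - d_bc, math.sqrt(se_ac ** 2 + se_bc ** 2)


def pool(estimates):
    """Fixed-effect inverse-variance pooling of (effect, std error) pairs."""
    weights = [1 / se ** 2 for _, se in estimates]
    pooled = sum(w * d for w, (d, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


def ci95(effect, se):
    """Approximate 95% confidence interval."""
    return effect - 1.96 * se, effect + 1.96 * se


# Hypothetical effect sizes [positive favours the first-named arm].
direct = (0.40, 0.20)                # drug vs placebo, possibly flattering trial
drug_vs_comparator = (0.00, 0.15)    # drug vs an active comparator
placebo_vs_comparator = (-0.10, 0.15)

indirect = indirect_estimate(*drug_vs_comparator, *placebo_vs_comparator)
combined = pool([direct, indirect])

if __name__ == "__main__":
    for label, est in (("direct", direct), ("indirect", indirect), ("combined", combined)):
        low, high = ci95(*est)
        print(f"{label:9s} effect {est[0]:.2f}  95% CI [{low:.2f}, {high:.2f}]")
```

With these made-up figures, the direct trial on its own has a confidence interval that just clears zero, while the combined estimate is pulled toward the smaller indirect one and its interval crosses zero. That is the dilution the authors describe, in miniature.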

Even using the unrestored results from Study 329, Paxil didn’t make the grade in the Network Meta-Analysis – a nice confirmation of our results. I also found the Prozac result interesting. It was approved for adolescents before the Black Box Warning and Lilly fought [unsuccessfully] to keep it off the Prozac label. Prozac remains the main drug with FDA Approval for kids. A later Lexapro/Celexa FDA Approval has long been questioned, particularly recently since [The citalopram CIT-MD-18 pediatric depression trial: Deconstruction of medical ghostwriting, data mischaracterisation and academic malfeasance] came out. I’ve personally always thought that the Prozac result was suspicious – thinking it simply made it under the wire before people caught on that so many studies were being jury-rigged. But this independent confirmation by Network Meta-Analysis suggests that it was indeed a valid result after all. Why it should be any different than the others doesn’t make much sense, but there it is…

The obvious drawback to Meta-Analyses and particularly the Network variety is that they need a lot of trials, so one is well along the path of a drug/class lifespan before such techniques can even be used. Further, one is at the mercy of the pharmaceutical industry’s choice of the Comparators being included in the studies. Bringing up an often unmentioned fact, in most cases, these short-term industry-funded studies conducted for approval and/or commercial purposes are all we really have – the only act in town. In spite of the fact that many, many patients are taking these drugs and staying on them for long periods of time, we still pore over those early brief trials as if they are definitive clinical roadmaps rather than, at best, simply starting places. Until we figure out a way to independently harvest the enormous untapped data pool generated from ongoing clinical usage, these often questionable approval trials retain their power to hold us in their grip [as they have for several decades].

And as the SSRIs were only effective in a third to a half of people with the MDD diagnosis, a new disease was born – Treatment Resistant Depression [TRD]. Then came multiple schemes trying to improve the SSRIs track record, one of which was augmentation with the other new class of drugs, the Atypical Antipsychotics. But the decade didn’t end like it started as the specialty was rife with scandal – Senator Grassley’s exposure of financial corruption among academic psychiatrists in 2008 and a flurry of civil and criminal suits against pharmaceutical companies for a variety of misbehaviors leading to even more exposure.

em abroad from thence upon the face of all the earth: and they left off to build the city. 9 Therefore is the name of it called Babel; because the Lord did there confound the language of all the earth: and from thence did the Lord scatter them abroad upon the face of all the earth.

The Bookmobile came every other Saturday. And once the Bookmobile Lady got to know you, she brought wonderful things you didn’t even know existed. Two weeks is a very long time, so there was a shelf with Compton’s Pictured Encyclopedias to fill in the gaps. But neither rivaled the Downtown Library where the selection seemed infinite. When I reached the traveling age, my Saturday Quarter bought either two bus tokens [10¢ each] for a round trip to the Library + a candy bar [5¢], or the Movie Matinee with Serial [10¢] + popcorn [10¢] + a coke [5¢]. Freedom brings some tough choices!

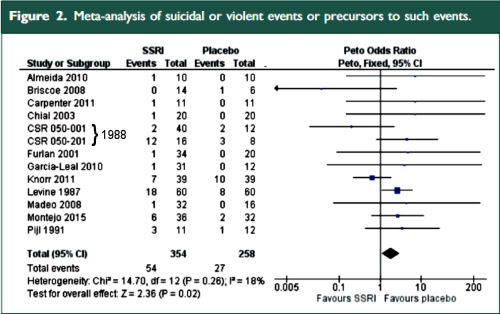

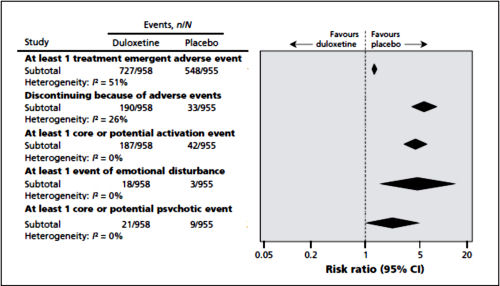

The two papers combined report seventeen blinded clinical trials [scattered over 28 years] with ~1300 non-depressed subjects on SSRI/SNRI drugs compared with ~1200 placebo controls. The findings of an increased signal for suicidality with these drugs have been traditionally discounted by critics with various rationales because the medications are being given to depressed people [who are suicide-prone anyway]. So here’s a large cohort of studied patients where that explanation obviously won’t fly. And in each case, the forest plots still show that the target adverse events are significantly more prevalent in the drug treatment group than in the placebo controls. The question then becomes, "What were those target Adverse Events?"

The classic Psychologist laboratory animal study involves genetically identical rats living in cages divided into two groups – one control group and one with some experimental intervention. Blinded observations of some pre-defined outcome parameter are recorded and the groups compared statistically at the end of the study. By making everything about the groups exactly the same, you can reasonably conclude that any differences in the outcome parameters are caused by the intervention. In another variation, one might have a single group with a control period of observations, then a second period making the intervention – comparing the control period to the experimental period. Whatever the case, the point is to aim for uniform groups with the only difference being the intervention – control vs experimental.

And then there are the Ethologists who do just the opposite. They often try not to interfere or make their presence known at all. So they sit in trees in the jungle and watch the animals in their natural habitat [or even become a part of it eg Dian Fossey]. Their books tend to be about one individual or a small group – more like a case study or a family study. And the tables, graphs, and statistics aren’t so prominent as they are in the works of the laboratory based scientists. They’re replaced by narrative descriptions.

![Adrianus de Groot [1914-2006]](http://1boringoldman.com/images/degroot.gif)

is of course first and foremost suited for “testing”, i.e., the fourth phase. In this phase one assesses whether certain consequences [predictions], derived from one or more precisely postulated hypotheses, come to pass. It is essential that these hypotheses have been precisely formulated and that the details of the testing procedure [which should be as objective as possible] have been registered in advance. This style of research, characteristic for the [third and] fourth phase of the cycle, we call hypothesis testing research.